By Robert L. Oprisko, Kirstie L. Dobbs, and Joseph DiGrazia

Graduate school is a period of apprenticeship that aspiring academics must pass through before they may enter the professoriate. When selecting a graduate program, prospective students may weigh institutional and departmental rank, geography, financial costs and benefits, the appeal of professors’ research, and opportunities specific to the program. There is some debate as to whether U.S. News and World Report’s dominant ranking system, which is based largely on a broad reputational survey, is helpful or part of the problem in higher education today. Malcolm Gladwell recently argued, for instance, that rankings based on preference are subjective and that responses premised on reputation are biased. Gladwell notes that when the former chief justice of the Michigan Supreme Court surveyed a group of lawyers, Penn State University’s law school received a middling score even though, at the time of the survey, Penn State had no law school.

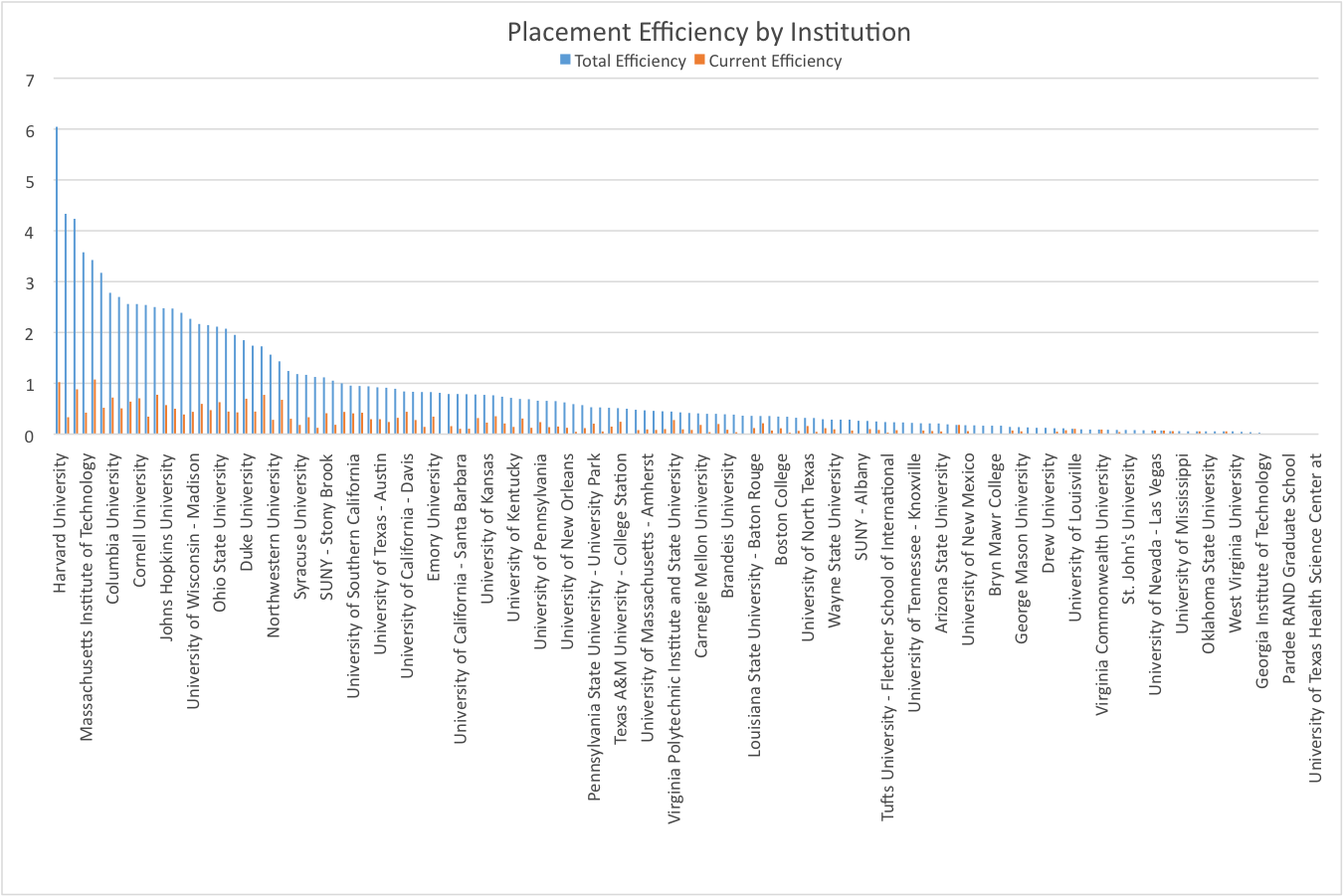

On August 22, President Obama gave a speech in Buffalo, New York, calling for new ranking metrics for higher education based on practical assessment of graduates’ success. Thus far, data on Ph.D. placement have been opaque, and what students do not know can hurt them. We have shown that where one attends graduate school plays a critical role in competitive advantage for academic employment, and that universities often hire from the same prestigious universities but benefit from those hires disproportionately. Joining Washington Monthly, we offer President Obama a new metric for ranking graduate programs by his performance criteria: placement efficiency.

Placement efficiency measures the number of tenured and tenure-track placements of a program’s Ph.D. graduates per tenured and tenure-track faculty member at the Ph.D.-granting institution. We believe that focusing on tenured and tenure-track appointments provides both the most accurate control for department size and the most appropriate operationalization of placement. Contingent faculty have no job security, and it would be inappropriate to count their appointments either as successful professional placements for the Ph.D.-granting program or as long-term human resource investments by the employing institution. The academy does not value contingent faculty as highly, in monetary terms, as tenured and tenure-track faculty (Stephen Saideman refers to contingent faculty as second-class or third-class citizens), despite evidence showing they are better teachers. We therefore believe it would be inappropriate to inflate institutional performance statistics with alienated labor.
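The definition above reduces to a single ratio. The following is a minimal Python sketch, assuming both counts have already been restricted to tenured and tenure-track appointments; the function name `placement_efficiency` is our own illustration, not the authors’ code.

```python
def placement_efficiency(placements: int, faculty: int) -> float:
    """Return tenure-line placements per tenure-line faculty member.

    placements: tenured/tenure-track placements of the program's Ph.D. graduates
    faculty:    tenured/tenure-track faculty employed by the program
    Contingent appointments are assumed to be excluded from both counts.
    """
    if faculty <= 0:
        raise ValueError("faculty count must be positive")
    return placements / faculty


# Aggregate SEC figures cited in this article: 111 placements, 300 faculty.
print(round(placement_efficiency(111, 300), 2))  # 0.37
```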

We use a data set that focuses solely on placement in Ph.D.-granting programs because we believe it gives the clearest picture of competitive advantage across these peer institutions. Our focus is on the Ph.D.-granting alma maters cited by 3,709 tenured and tenure-track political scientists at 136 research-intensive universities. Our selection is deliberately narrow so that we do not compare institutions with different missions; we are interested in performance variables, not proxies. We therefore consider this an intersectional study of the relative advantage enjoyed by the upper echelons of political science education. We do, however, recognize that universities also place graduates in the professoriate at national liberal arts colleges, regional universities, regional colleges, and community colleges. We assert that where one is employed should not define a scholar’s brilliance, diminish or enhance their perceived contribution to a discipline, or affect their access to success.
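Computing placement efficiency from a faculty data set like this one requires two tallies: tenure-line placements credited to each alma mater, and tenure-line faculty employed by each institution. The sketch below assumes a hypothetical record schema (`FacultyRecord` with `employer`, `alma_mater`, and `tenure_line` fields) and placeholder institution names; it is not the authors’ data format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FacultyRecord:
    """One professor in the data set (hypothetical schema)."""
    employer: str      # Ph.D.-granting institution where the professor works
    alma_mater: str    # institution that granted the professor's Ph.D.
    tenure_line: bool  # True for tenured or tenure-track appointments


def tally(records):
    """Return (placements by alma mater, tenure-line faculty by employer)."""
    tenure_line = [r for r in records if r.tenure_line]  # drop contingent faculty
    placements = Counter(r.alma_mater for r in tenure_line)
    faculty = Counter(r.employer for r in tenure_line)
    return placements, faculty


# Toy records; institution names are placeholders, not real data.
records = [
    FacultyRecord("Univ A", "Univ B", True),
    FacultyRecord("Univ A", "Univ A", True),
    FacultyRecord("Univ B", "Univ A", True),
    FacultyRecord("Univ B", "Univ C", True),
    FacultyRecord("Univ B", "Univ A", False),  # contingent: excluded
]
placements, faculty = tally(records)
for school in sorted(faculty):
    print(school, placements[school] / faculty[school])
# Univ A 1.0
# Univ B 0.5
```

Using `Counter` means an institution with no placements simply reads as zero rather than raising a `KeyError`.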

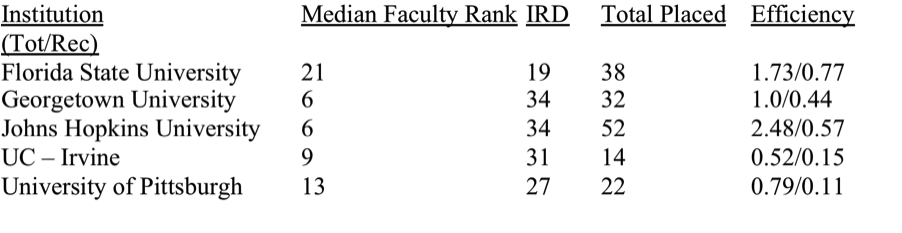

Placement efficiency provides a clear threshold for institutional peer status. A score of 1.0 (Georgetown University’s score) shows that a program has placed as many Ph.D. graduates within the R1 academy as it employs tenured and tenure-track faculty. Placement efficiency is, therefore, a metric that controls for department size when assessing placement. Scores above 1.0 indicate that a program enjoys a larger network, which has been linked to departmental prestige; we have previously shown the importance of prestige in academic hiring. Scores below 1.0 indicate that the program and its graduates are not valued as highly on the academic market and do not enjoy large networks.
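The 1.0 line can be applied mechanically. This is a small sketch; the labels "at parity", "above parity", and "below parity" are our own shorthand, and `math.isclose` is used so that a computed ratio such as 20/20 is not misclassified by floating-point noise.

```python
import math


def peer_status(efficiency: float, parity: float = 1.0) -> str:
    """Classify a program against the 1.0 parity line (labels are illustrative)."""
    if math.isclose(efficiency, parity):
        return "at parity"     # places as many graduates as it employs
    if efficiency > parity:
        return "above parity"  # network larger than its own faculty
    return "below parity"


# Scores quoted in this article (SEC aggregate = 111 placements / 300 faculty).
for name, score in [("Georgetown", 1.0), ("Johns Hopkins", 2.48), ("SEC aggregate", 111 / 300)]:
    print(name, peer_status(score))
```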

The U.S. News methodology is merely reflective: it asks two academics per Ph.D.-granting program to rate all Ph.D.-granting departments on a five-point scale, and it does not explain or rationalize changes in institutional rank over time. Placement and placement efficiency, as metrics for ranking programs and institutions, also support a clear subset analysis: restricting attention to assistant professors captures recent market activity (typically the past six years), which can indicate changing trends in the perceived quality of programs and the employability of their students. For instance, Florida State University is ranked 40th by U.S. News, but its recent efficiency (0.77) is exceeded only by Stanford University (1.08), Harvard University (1.02), UC-Berkeley (0.88), and UCLA (0.78), all of which U.S. News places among its top ten political science programs. Georgia State University also appears to be on the rise: its recent efficiency score (0.08) is two-thirds of its total efficiency score (0.12).
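Recent and total efficiency share the same denominator, so they differ only in which placements are counted. The sketch below assumes, as the text does, that assistant professors stand in for recent placements; the `Placement` type and the counts are hypothetical and illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    """A tenure-line placement of one of the program's Ph.D. graduates."""
    rank: str  # "assistant", "associate", or "full"


def efficiencies(placements, faculty: int):
    """Return (recent, total) efficiency; assistant professors proxy recent placements."""
    total = len(placements) / faculty
    recent = sum(p.rank == "assistant" for p in placements) / faculty
    return recent, total


# Illustrative counts only: 20 faculty, 10 placements, 6 of them assistant professors.
placements = [Placement("assistant")] * 6 + [Placement("associate")] * 3 + [Placement("full")]
recent, total = efficiencies(placements, faculty=20)
print(recent, total, recent / total)
```

Because the faculty count cancels, `recent / total` is just the share of a program’s placements who are assistant professors. For Georgia State, 0.08 / 0.12 ≈ 0.67, the two-thirds noted above.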

Placement efficiency is a powerful metric for assessing Ph.D.-granting programs, both for prospective students and for faculty evaluators. Its precision is perhaps most visible when juxtaposed with the weakness of the U.S. News rankings. Five programs share the #40 rank in U.S. News, which suggests some form of parity. Our metrics, however, provide greater detail:

Although U.S. News gives these five programs the same rank, our metrics show that they are quite dissimilar. Given their median faculty rank and Institutional Ranking Difference (IRD) scores, Georgetown and Johns Hopkins appear to hire faculty from the same institutions; IRD is derived by subtracting an institution’s rank from the average rank of its faculty’s Ph.D.-granting alma maters. Hopkins has the larger network, with 52 placements and a total efficiency of 2.48. Georgetown’s recent efficiency score of 0.44, however, is proportionately larger relative to its total efficiency score and much closer to Hopkins’, suggesting the program is gaining momentum. Florida State’s small IRD score suggests it is unconcerned with chasing prestige, yet it is doing well, especially in placing recent graduates; FSU faculty are adding value to their program and marketability to their students. Both UC-Irvine and Pittsburgh fall short in comparison.
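The IRD definition translates directly into code. This sketch assumes rank 1 is best, so under the subtraction as defined a negative IRD means faculty trained at better-ranked programs and a value near zero means faculty from similarly ranked ones; the function name and the example ranks are hypothetical.

```python
from statistics import mean, median


def institutional_ranking_difference(own_rank: int, alma_mater_ranks: list[int]) -> float:
    """IRD as defined in the text: mean alma-mater rank minus the institution's rank.

    Assumes rank 1 is best: negative values mean faculty come from better-ranked
    programs; values near zero mean faculty come from similarly ranked programs.
    """
    return mean(alma_mater_ranks) - own_rank


# Hypothetical program ranked 40th whose four faculty hold Ph.D.s from
# programs ranked 5, 10, 20, and 45.
ranks = [5, 10, 20, 45]
print(institutional_ranking_difference(40, ranks))
print(median(ranks))  # the median faculty rank, the other statistic cited above
```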

Students can and should look at these scores to gauge the risk of graduating from a particular institution, given that the academic job market is flooded. Universities can use these metrics when making decisions about the viability of programs and their leadership. Pittsburgh, for example, may want to reverse its diminishing ability to place students at R1 programs. A radical and unlikely, though interesting, possibility would be for academic consortia to band together rather than face irrelevance. For example, the Southeastern Conference (SEC) has 300 faculty at fourteen member institutions but has placed only 111 students in R1 positions. All member institutions would benefit if the SEC required a strategic hiring initiative giving preference to candidates from other SEC schools. This may look like mercantilist behavior, but these programs are not currently able to find success on the general market. The state of Alabama, for example, may feel its graduates have been particularly and unduly ignored, as it has placed only two scholars (of 3,709) within Ph.D.-granting institutions (one from Auburn, the other from Alabama-Tuscaloosa). Through an academic alliance, SEC members would, at the very least, enjoy increased prestige and network size by valuing each other’s programs and intellectual production. In the unlikely event that all fourteen programs placed graduates evenly and only within the SEC, each would place an average of 21.43 graduates, more than Texas A&M, the SEC member with the highest placement (21).
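The SEC figures are simple arithmetic on the numbers given in the paragraph above: the 21.43 average is the conference’s 300 faculty positions divided evenly among its 14 programs, assuming every position went to an SEC graduate. The constant names below are our own.

```python
SEC_PROGRAMS = 14         # member institutions, per the text
SEC_FACULTY = 300         # tenure-line faculty across those institutions
SEC_PLACEMENTS = 111      # R1 placements of SEC Ph.D. graduates
TEXAS_AM_PLACEMENTS = 21  # highest placement count of any SEC member

efficiency = SEC_PLACEMENTS / SEC_FACULTY  # conference-wide placement efficiency
even_share = SEC_FACULTY / SEC_PROGRAMS    # each program's share if all 300 seats went to SEC graduates

print(f"SEC efficiency: {efficiency:.2f}")          # 0.37
print(f"Even share per program: {even_share:.2f}")  # 21.43
print(even_share > TEXAS_AM_PLACEMENTS)             # True
```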

Our hope is that evaluating academic institutions and programs by placement, rather than merely by reputation, will shift the academy’s focus from “we employ faculty from the most prestigious schools” to “our graduates are employed within the professoriate, even at the most prestigious schools.” Perhaps this will incentivize departments to specialize in order to build a reputation for producing the best scholars in a particular field or focus. Perhaps it will also motivate peer institutions to cultivate value in one another. What is likely, however, is that aspiring graduate students will begin to ignore programs that do not place well, tying the fate of academic programs, including their faculty, to the success of their students.

Robert L. Oprisko is a Visiting Professor at Butler University, Kirstie Lynn Dobbs is a PhD candidate in Political Science at Loyola University – Chicago, and Joseph DiGrazia is a PhD candidate in Sociology at Indiana University. Butler University research assistants Nathaniel Vaught, Needa Malik, and Kate Trinkle also contributed to the research in this article. This is the second article in a series on hiring practices in higher education. Other articles in the series can be found here and here.

Established in 1995, the Georgetown Public Policy Review is the McCourt School of Public Policy’s nonpartisan, graduate student-run publication. Our mission is to provide an outlet for innovative new thinkers and established policymakers to offer perspectives on the politics and policies that shape our nation and our world.
