While statistics has contributed to scientific advancements, the origins of this discipline are surprisingly dark. What is the place of ethics in statistical analysis?

In 1930, the British statistician Ronald Fisher published a book entitled The Genetical Theory of Natural Selection, which argued, among other topics, that women are naturally attracted to men whose genes are best-suited for “reproductive success.” No doubt, to a modern reader, Fisher’s “sexy son hypothesis” seems peculiar. It may not be surprising to learn that Fisher was a devoted eugenicist.

Fisher also profoundly influenced modern statistics. He developed the analysis of variance (ANOVA), the concept of variance (he also coined the term “variance”), the concept of a null hypothesis, and the F-test, which was named in his honor. Two other prominent eugenicists, Francis Galton and Karl Pearson, developed other basic statistical tools, such as regression analysis, the p-value, and the chi-squared test. Galton sought a means of measuring “the mental peculiarities of different races.” Pearson wrote that societies advance “by way of war with inferior races.”

Given the emerging gold-standard status of statistical analysis in social sciences in general and public policy in particular, students, researchers, and policymakers should critically examine the origins and ethics of the tools they regularly use. To be clear, this article does not seek to discredit statistical analysis, an invaluable research method. Rather, this article scrutinizes the connection between eugenics and statistics and how it might inform the ethics of quantitative research methods.

Eugenics and Policy

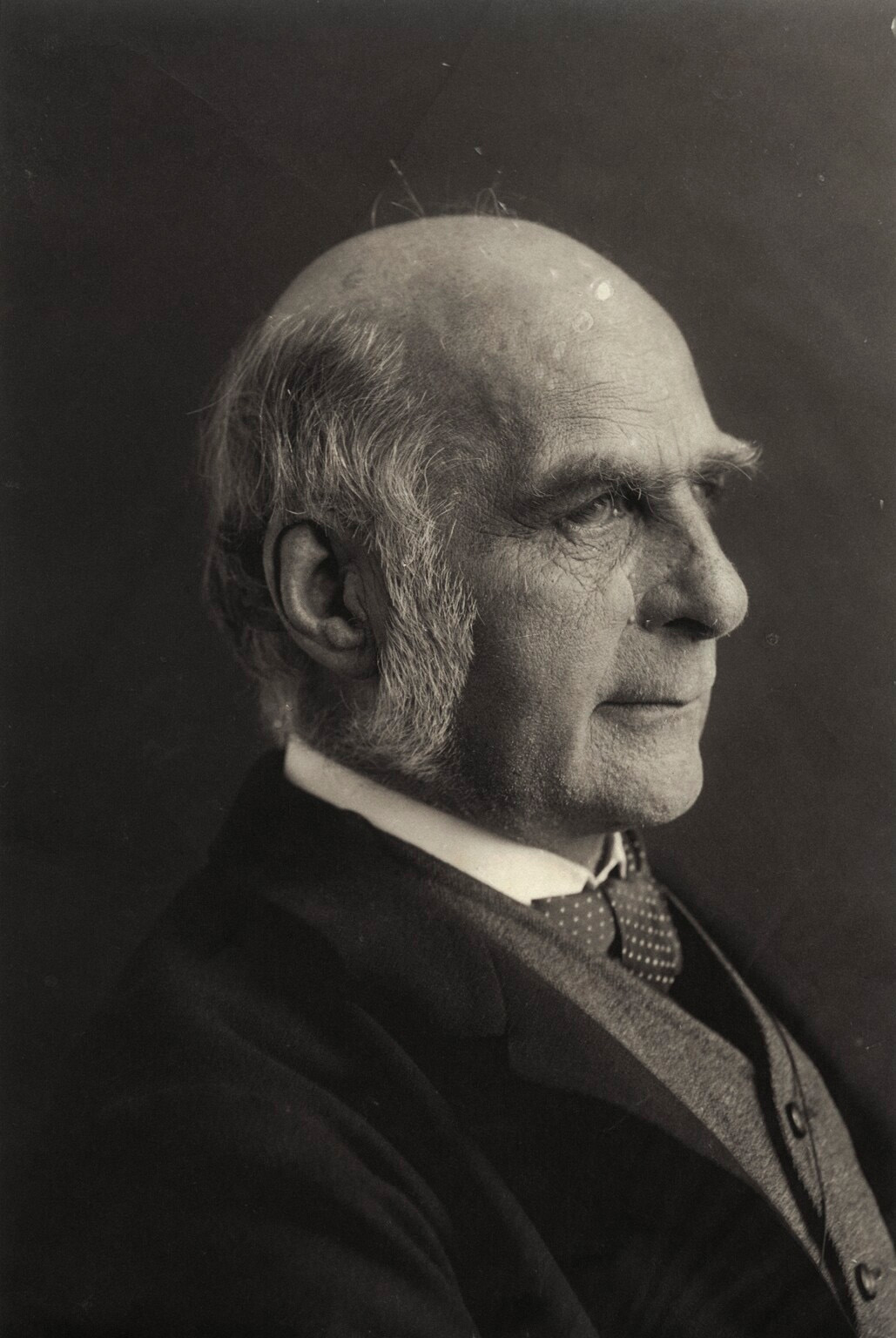

Francis Galton, a British social scientist, coined the term “eugenics” in the late 19th century to advocate a scientific movement that aimed to apply genetics and heredity to strengthening the “human race.” Eugenicists like Galton believed genetics determined characteristics like intelligence, physical fitness, and even “criminality.” For instance, Galton’s first study in eugenics claimed members of England’s upper classes possessed superior intelligence due to heredity.

Charles Davenport, an American chicken breeder, brought eugenics to the United States. He founded the Eugenics Record Office (ERO) on Long Island, New York, which collected hundreds of thousands of records from families, hospitals, and prisons to determine the heritability of “undesirable” traits. Eugenics rapidly gained popularity and even made its way into the principles of the 1880-1930 U.S. progressive movement, which formed in response to the problems of industrialization. One of the most prominent progressives, Margaret Sanger, founder of the National Committee on Federal Legislation for Birth Control (a precursor to Planned Parenthood), advocated birth control to “assist the race toward the elimination of the unfit.”

Eugenics also influenced U.S. policy. Over the course of the 20th century, 32 U.S. states adopted sterilization laws based on eugenics principles, enabling the forced sterilization of about 60,000 Americans. Lawmakers used eugenics to justify the passage of the Immigration Act of 1924, which greatly restricted immigration from Eastern Europe and prohibited immigration from Asia. Disturbingly, in 1925, U.S. sterilization and immigration laws won praise from Adolf Hitler.

During the 1930s, eugenics began to lose popularity in the United States, though a small group of scientists continued publishing works advocating eugenics policies and research. As they found a waning audience in the United States and eugenics came to be considered pseudoscience, their work began circulating in Germany, where it helped develop early Nazi research and sterilization programs.

The Eugenics Legacy

It is deeply unsettling to think that the methods in which policy analysts invest so much faith emerged as part of this dark chapter in history. One may be tempted to brush off the racist beliefs of Galton, Fisher, Pearson, and other eugenicists as separate from the scientific advances they made. However, there is evidence their beliefs were closely tied to their work. Eileen Magnello traces Pearson’s eugenics motivations for pursuing development of statistical methodologies. Similarly, Francisco Louçã examines how eugenics ideologies motivated the scientific research of Galton, Pearson, and Fisher.

A more productive question is why statistical analysis so appealed to eugenicists seeking to validate their ideas.

One possible answer is statistical significance, a concept policy students must learn well. In practical terms, statistical significance can provide evidence for the existence of an association while saying nothing about its nature or magnitude. For example, a statistically significant result can indicate that there is some relationship between rising ice cream sales and increasing crime rates, but not whether one causes the other or by how much. Eugenicists first developed and championed the concept because they were more concerned with correlation than impact or morality. Deirdre McCloskey and Stephen Ziliak’s 2008 book, The Cult of Statistical Significance, shows the centrality of statistical significance in the minds of eugenicist researchers.
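The gap between significance and magnitude can be made concrete with a small simulation. The sketch below is only an illustration on synthetic data, not an analysis of real ice cream or crime figures: the function names, the sample size, and the slope of 0.02 are all assumptions chosen for the example, and the p-value uses a large-sample normal approximation rather than an exact test.

```python
import math
import random


def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def approx_p_value(r, n):
    """Two-sided p-value for H0: no correlation.

    Uses the usual t statistic for a correlation and, because n is
    large, approximates its distribution with a standard normal.
    """
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return math.erfc(abs(t) / math.sqrt(2))


def simulate(n, slope, seed=0):
    """Generate synthetic (x, y) data where y depends only weakly on x."""
    rng = random.Random(seed)
    xs = [rng.gauss(0, 1) for _ in range(n)]
    ys = [slope * x + rng.gauss(0, 1) for x in xs]
    r = pearson_r(xs, ys)
    return r, approx_p_value(r, n)


# Hypothetical setup: a very weak relationship observed in a very large sample.
weak_r, weak_p = simulate(n=200_000, slope=0.02)
print(f"correlation r = {weak_r:.4f}")
print(f"share of variance explained (r^2) = {weak_r ** 2:.6f}")
print(f"approximate p-value = {weak_p:.2e}")
```

With a sample this large, the p-value falls far below conventional thresholds even though the correlation is close to zero and explains only a tiny fraction of the variation. The result is "significant" without being large, and it says nothing about causation.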

Another statistical concept eugenicists were fixated on was variation. During Galton’s time, a Belgian astronomer named Adolphe Quetelet discovered that measurement errors cluster around a true value, a pattern that became known as the “bell curve.” Quetelet revered the true value as an ideal “average man.” However, Galton saw the errors as important variation that could lead to the evolution of “superior” traits. This view motivated him to develop two of the most important tools for modern statisticians: correlation and regression. Galton coined the term “regression toward mediocrity,” his theory that exceptional physical traits, such as being eight feet tall, generally regressed toward inferior average traits across generations. However, applying the ideas of his half-cousin, Charles Darwin, Galton proposed that exceptional traits can drive changes in the population average when they occur. The statistical tools he created were meant to help predict the exceptional traits in the service of finding ways to promote them. His ultimate goal was to find out how best to breed people to inherit superior intelligence.
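The statistical pattern behind “regression toward mediocrity,” now usually called regression toward the mean, can be reproduced in a few lines. The following is a minimal sketch under stated assumptions, not a reconstruction of Galton’s data or method: it assumes a hypothetical model in which a parent’s and a child’s height share one common component plus independent noise, and the mean of 170 cm, the spreads, and the 185 cm cutoff are invented for illustration.

```python
import random
import statistics


def simulate_pairs(n, seed=0):
    """Generate hypothetical (parent, child) height pairs in centimeters.

    Each pair shares one common component; the rest is independent noise,
    so parent and child heights are correlated but not identical.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        shared = rng.gauss(0, 1)
        parent = 170 + 5 * shared + rng.gauss(0, 5)
        child = 170 + 5 * shared + rng.gauss(0, 5)
        pairs.append((parent, child))
    return pairs


pairs = simulate_pairs(50_000)

# Keep only the pairs whose parent is exceptionally tall (assumed cutoff).
cutoff = 185
tall = [(p, c) for p, c in pairs if p >= cutoff]

population_mean = statistics.fmean(c for _, c in pairs)
tall_parent_mean = statistics.fmean(p for p, _ in tall)
tall_child_mean = statistics.fmean(c for _, c in tall)

print(f"population mean (children): {population_mean:.1f} cm")
print(f"mean of exceptionally tall parents: {tall_parent_mean:.1f} cm")
print(f"mean of their children: {tall_child_mean:.1f} cm")
```

In this simulation the children of exceptionally tall parents are, on average, taller than the population but shorter than their parents. The pattern follows from imperfect correlation alone; nothing in the arithmetic marks the average as “inferior” or the extreme as “superior.”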

While these advances in methodology may in themselves be positive, they have contributed to controversial policy prescriptions. For example, to this day, teachers in public and private schools fit grades to a bell curve as a means of distinguishing the exceptional from the average. In her book Keeping Track, Jeannie Oakes traces the development of grading systems in the United States and how social Darwinism, a field closely related to eugenics, influenced it. Standardized testing arguably continues this tradition through, for instance, the SAT and Advanced Placement (AP) tests, which were originally conceived to exclude people of color based on perceived notions of racial inferiority.

The Ethics of Quantitative Methods

Notwithstanding the considerable impact of eugenicists on statistics and certain policies, one might still point out the redemption of statistics through its advancement. Indeed, statisticians have largely reconceptualized ideas like variation and normality, ostensibly leaving behind the advancements of the eugenicists. However, researchers, especially in the field of public policy, still rely heavily on significance, regression analysis, and other eugenicist-made methods. The inventors of these methods used their creations to advocate inhumane policies like forced sterilization. Schools continue using eugenics-inspired grading and testing systems. Writing off the eugenicists as long dead misses an important opportunity to reflect on the ethics of quantitative methods.

Policy schools can start a conversation about this by teaching ethics. For those like the McCourt School that already do, this subject should be a centerpiece of the curriculum. It should also feature in the curricula of statistics courses, which may consider including a class or two on the philosophy of science to ground students of quantitative research methods in ethics from the start.

The history of statistics should not invalidate this important discipline, but it should serve as a constant reminder of the danger of applying evidence without ethical considerations. It cautions against putting excessive faith in a single aspect of statistics, such as significance, while ignoring other important factors, like impact. One should not dismiss statistical evidence, but rather maintain constant awareness of its limitations.

It is crucial for students and practitioners in policy to have a strong quantitative base. Equally important is ethical grounding, gained not only through broad, theoretical philosophy classes, but also candid confrontation with the disturbing history of our discipline.

Photo: Francis Galton, from Wikimedia Commons

Ido Levy is the editor-in-chief of Georgetown Public Policy Review. He is a second-year MPP student at the McCourt School of Public Policy. He earned a BA in government from Israel’s IDC Herzliya. He has completed research work for the International Institute for Counter-Terrorism, Institute for National Security Studies, Council on Foreign Relations, and Consortium for the Study of Terrorism and Responses to Terrorism. After graduating, he hopes to enter the security research world through a think tank or academia.